Computing Accurate Probabilistic Estimates of One-D Entropy from Equiprobable Random Samples

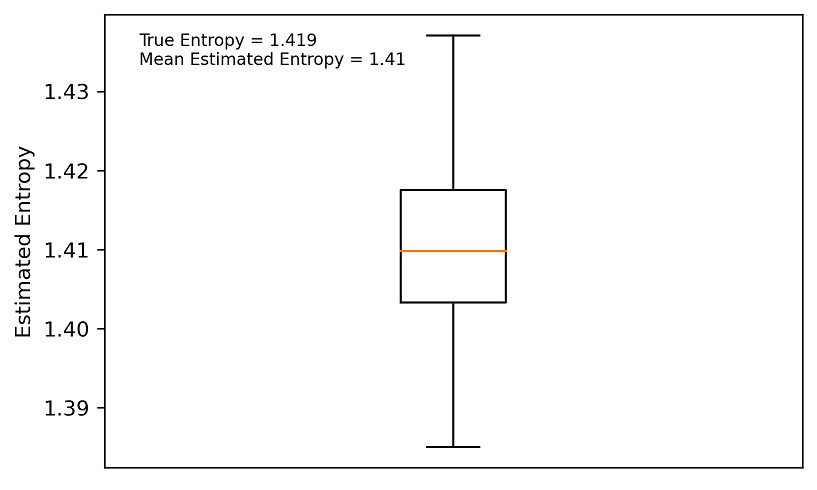

We develop a simple Quantile Spacing (QS) method for accurate probabilistic estimation of one-dimensional entropy from equiprobable random samples, and compare it with the popular Bin-Counting (BC) method. In contrast to BC, which uses equal-width bins with varying probability mass, the QS method uses estimates of the quantiles that divide the support of the data-generating probability density function (pdf) into equal-probability-mass intervals. Whereas BC requires optimal tuning of a bin-width hyperparameter whose value varies with sample size and with the shape of the pdf, QS requires only that the number of quantiles be specified. Results indicate, for the class of distributions tested, that the optimal number of quantile spacings is a fixed fraction of the sample size (empirically determined to be approximately 0.25 to 0.35), and that this fraction is relatively insensitive to distributional form or sample size. This gives QS a clear advantage over BC, since hyperparameter tuning is not required. Bootstrapping is used to approximate the sampling variability distribution of the resulting entropy estimate, and is shown to accurately reflect the true uncertainty. For the four distributional forms studied (Gaussian, Log-Normal, Exponential and Bimodal Gaussian Mixture), expected estimation bias is less than 1% and uncertainty is relatively low even for very small sample sizes. We speculate that estimating quantile locations, rather than bin probabilities, results in more efficient use of the information in the data to approximate the underlying shape of an unknown data-generating pdf.
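The equal-probability-mass idea can be illustrated with a naive plug-in sketch. It is not the authors' algorithm: the abstract does not say how the quantiles are estimated, so the use of `np.quantile`, the function name `qs_entropy`, and the default `fraction=0.25` (taken from the lower end of the reported range) are all assumptions made for illustration. If each of the N_Q intervals carries mass 1/N_Q and the density is treated as constant within an interval, the entropy reduces to the mean of log(N_Q · Δz_i), where Δz_i is the width of interval i. Because the quantile estimation here is so simple, this sketch should not be expected to reach the bias levels reported above.

```python
import numpy as np


def qs_entropy(sample, fraction=0.25):
    """Naive quantile-spacing entropy estimate in nats (illustrative sketch only).

    Assumption: quantile positions come straight from np.quantile on the
    sample; the paper's own quantile-estimation procedure may differ.
    """
    x = np.asarray(sample, dtype=float)
    # Number of equal-probability-mass intervals as a fraction of sample size.
    n_q = max(2, int(round(fraction * x.size)))
    # n_q + 1 edges, from the sample minimum to the sample maximum.
    edges = np.quantile(x, np.linspace(0.0, 1.0, n_q + 1))
    spacings = np.diff(edges)
    if np.any(spacings <= 0):
        raise ValueError("tied values produce zero-width intervals")
    # Piecewise-constant density: p_i = 1 / (n_q * spacing_i), mass 1/n_q each,
    # so H = -(1/n_q) * sum(log p_i) = mean(log(n_q * spacing_i)).
    return float(np.mean(np.log(n_q * spacings)))


rng = np.random.default_rng(0)
sample = rng.normal(0.0, 1.0, size=5000)
estimate = qs_entropy(sample)
exact = 0.5 * np.log(2.0 * np.pi * np.e)  # analytic entropy of N(0, 1)
print(f"estimate = {estimate:.3f} nats, exact = {exact:.3f} nats")
```

One property that is easy to check is scale equivariance: doubling every sample value doubles every spacing, so the estimate shifts by exactly log 2, as differential entropy should.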
